I came across AutoML while exploring some data science use cases we were tackling, and ended up spending some time with it. Here are some notes on Google Cloud AutoML from a developer’s perspective. At the end I also share some thoughts on how this ecosystem is shaping up.

Programming Model vs Machine Learning

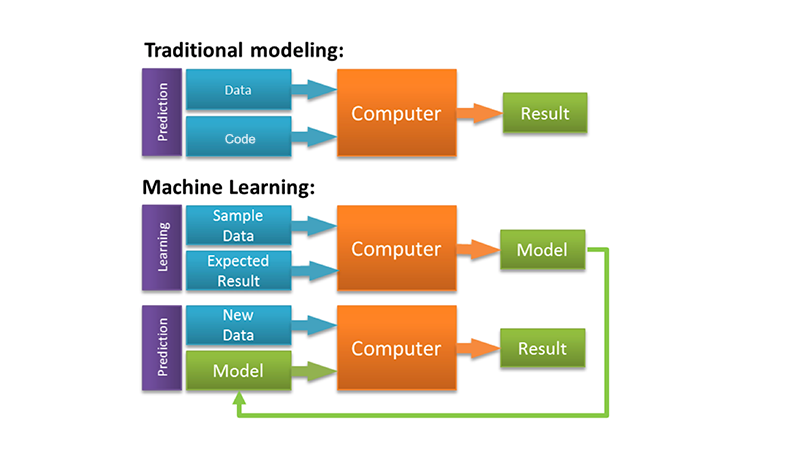

As a developer, before diving deeper into data science, I always wondered how Machine Learning differs from traditional programming. I’m going to simplify things a bit to make the difference apparent.

Programming Model

We write some rules (code), receive input data and generate output. It’s a fairly established model for us to think about.

Machine Learning

We start with sample data (training set) and expected results to generate a model. Once we have a model we can give it the input data and generate output. The primary difference is that we don’t write the rules that generate the output - our model learns them on its own by looking at the sample data and expected results. Depending on the type of sample data available, there are different types of learning - supervised, unsupervised, semi-supervised and reinforcement learning.
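To make the contrast concrete, here is a minimal sketch in plain Python. The temperature conversion, the sample values and all function names are my own hypothetical choices for illustration; the "training" step is an ordinary least-squares line fit, picked only because it is the simplest possible model.

```python
from typing import List, Tuple


# Programming model: we write the rule ourselves.
def celsius_to_fahrenheit(celsius: float) -> float:
    return celsius * 9 / 5 + 32


# Machine learning: we only supply sample inputs and expected results,
# and a training step recovers the rule (here, a straight line).
def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least squares for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    slope = covariance / variance
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical training set: Celsius readings with known Fahrenheit values.
training_inputs = [-10.0, 0.0, 15.0, 30.0, 100.0]
expected_results = [14.0, 32.0, 59.0, 86.0, 212.0]

slope, intercept = fit_line(training_inputs, expected_results)


def learned_model(celsius: float) -> float:
    """The 'model': the rule was learned from data, not typed in by us."""
    return slope * celsius + intercept
```

Both functions end up doing the same job, but `celsius_to_fahrenheit` encodes a rule we already knew, while `learned_model` had to discover the slope and intercept from the examples.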

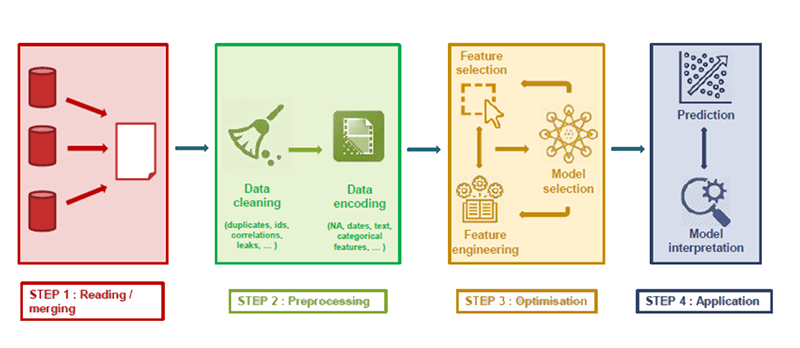

Lifecycle of a Machine Learning Project

To fully understand the value that an AutoML system brings to the table one has to understand the lifecycle of a typical Machine Learning project.

Image Source: 1


Steps 1 & 2: These are largely about getting the data into a consumable format. Most of this has to be done manually, whether you are doing traditional programming, an ML project or even AutoML.

Step 3: This is where most of the actual time is spent, and it draws on a variety of skills from your arsenal around the art and science of machine learning. Most of the time you have to go back and forth with your data to ensure you are working with the right set of features and the right model. This step, along with Step 4, is time consuming. You can read more about feature engineering and feature selection here.

Step 4: Using the model trained in Step 3 on the chosen features and the sample (training) data, we run it on test data and interpret the predicted results. Google Cloud has a very good primer on evaluating the results here.
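As a rough illustration of what evaluating on test data means for a regression problem, the sketch below compares predictions against held-out actual values using two common error metrics. The numbers and variable names are hypothetical, and these two metrics are only examples of what one might look at.

```python
import math
from typing import List


def mean_absolute_error(actual: List[float], predicted: List[float]) -> float:
    """Average size of the prediction error, in the target's own units."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)


def root_mean_squared_error(actual: List[float], predicted: List[float]) -> float:
    """Like MAE, but large misses are penalised more heavily."""
    squared = sum((a - p) ** 2 for a, p in zip(actual, predicted))
    return math.sqrt(squared / len(actual))


# Hypothetical held-out test set: what really happened vs. what the model said.
actual_clicks = [120.0, 80.0, 200.0, 150.0]
predicted_clicks = [110.0, 90.0, 230.0, 150.0]

mae = mean_absolute_error(actual_clicks, predicted_clicks)  # 12.5
rmse = root_mean_squared_error(actual_clicks, predicted_clicks)
```

An RMSE noticeably larger than the MAE, as here, hints that a few predictions are off by a lot rather than all of them being off by a little.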

Where does AutoML fit?

AutoML aims to automate the functional aspects of data encoding, feature engineering / selection, model selection, evaluating predictions and model interpretation. Some frameworks also try to automate other aspects.
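One way to picture the model selection part is a loop that trains a few candidate models and keeps the one with the lowest error on held-out data. The sketch below is only an illustration in plain Python: the two candidates, the data and every name in it are hypothetical, and it is not a description of how any particular AutoML product works internally.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[float], float]
Trainer = Callable[[List[float], List[float]], Model]


def train_mean_baseline(xs: List[float], ys: List[float]) -> Model:
    """Candidate 1: always predict the average of the training targets."""
    mean_y = sum(ys) / len(ys)
    return lambda x: mean_y


def train_linear(xs: List[float], ys: List[float]) -> Model:
    """Candidate 2: a least-squares straight line."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def validation_error(model: Model, xs: List[float], ys: List[float]) -> float:
    """Mean absolute error of a model on held-out data."""
    return sum(abs(model(x) - y) for x, y in zip(xs, ys)) / len(xs)


def select_model(
    candidates: Dict[str, Trainer],
    train_x: List[float],
    train_y: List[float],
    val_x: List[float],
    val_y: List[float],
) -> Tuple[str, Dict[str, float]]:
    """Train every candidate and return the best name plus all scores."""
    scores = {}
    for name, trainer in candidates.items():
        model = trainer(train_x, train_y)
        scores[name] = validation_error(model, val_x, val_y)
    best_name = min(scores, key=scores.get)
    return best_name, scores


# Hypothetical data, split into a training part and a validation part.
best_name, scores = select_model(
    {"mean_baseline": train_mean_baseline, "linear": train_linear},
    train_x=[1.0, 2.0, 3.0, 4.0, 5.0, 6.0],
    train_y=[12.0, 19.0, 31.0, 42.0, 48.0, 61.0],
    val_x=[7.0, 8.0],
    val_y=[70.0, 81.0],
)
```

On this toy data the linear candidate wins comfortably. A real system would search over many more model families and settings, but the shape of the loop is the same.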

Here are links to some of these frameworks:

Forecasting using Cloud AutoML

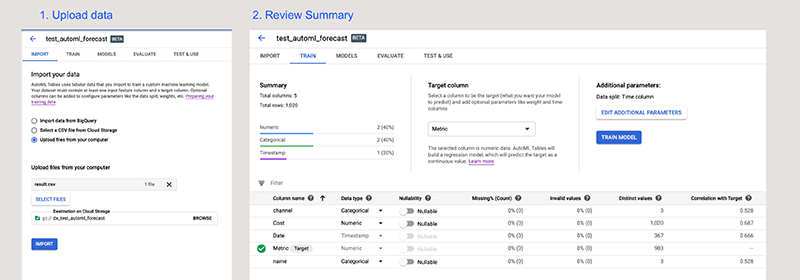

Loading data and getting started with Cloud AutoML was a breeze.

You upload the data to Cloud Storage and then Cloud AutoML imports it. After the import we see some key aspects of our data, along with manual controls to tweak the auto-detected “Data type” of each column. Once we choose the Target Column, it runs a correlation analysis to figure out which columns matter. It also shows some additional stats about the data values.
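To give a feel for what a correlation analysis against the Target Column does, here is a small sketch that ranks columns by how strongly they move with the target. I am assuming the Pearson coefficient purely for illustration; I don't know which statistic the product actually computes, and the column names and values are made up.

```python
import math
from typing import List


def pearson_correlation(xs: List[float], ys: List[float]) -> float:
    """Pearson coefficient: +1 or -1 is a perfect linear link, 0 is none."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    spread_x = math.sqrt(sum((x - mean_x) ** 2 for x in xs))
    spread_y = math.sqrt(sum((y - mean_y) ** 2 for y in ys))
    return covariance / (spread_x * spread_y)


# Hypothetical feature columns and target column.
columns = {
    "budget": [100.0, 200.0, 300.0, 400.0, 500.0],
    "day_of_month": [3.0, 17.0, 9.0, 28.0, 12.0],
}
clicks = [11.0, 19.0, 32.0, 41.0, 48.0]

correlation_with_target = {
    name: pearson_correlation(values, clicks) for name, values in columns.items()
}

# Strongest relationship first, ignoring the sign of the correlation.
ranked_columns = sorted(
    correlation_with_target,
    key=lambda name: abs(correlation_with_target[name]),
    reverse=True,
)
```

In this made-up data `budget` tracks `clicks` almost perfectly, while `day_of_month` is only loosely related, so `budget` comes out on top.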

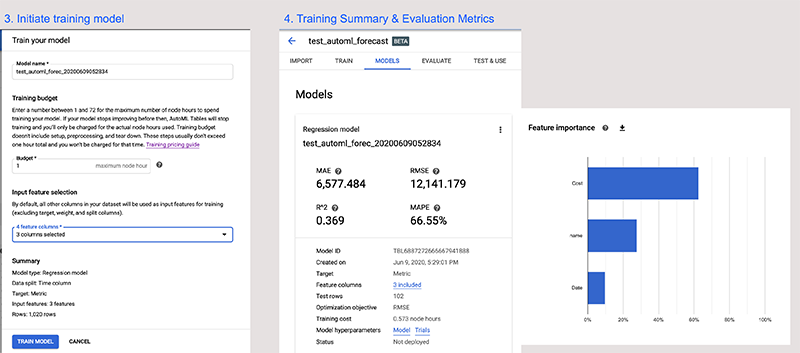

After choosing a Target Column you can start training the model. While initiating the training you can choose the number of hours (budget) and the feature columns. Depending on whether the Target Column is a numeric value or a dimension, Cloud AutoML auto-picks a model type.
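The idea of picking a model type from the Target Column can be sketched in a few lines. This is a deliberately naive, hypothetical rule (numeric target means regression, anything else means classification) and `pick_model_type` is my own name; the real service may well use more nuanced checks.

```python
from typing import List


def pick_model_type(target_values: List[object]) -> str:
    """Naive rule of thumb: numeric target -> regression, else classification."""

    def is_number(value: object) -> bool:
        # bool is a subclass of int in Python, so exclude it explicitly.
        return isinstance(value, (int, float)) and not isinstance(value, bool)

    if all(is_number(value) for value in target_values):
        return "regression"
    return "classification"


# A numeric target such as Clicks vs. a dimension such as the channel name.
clicks_model_type = pick_model_type([120, 80.5, 200])
channel_model_type = pick_model_type(["Adwords", "Facebook", "DBM"])
```

With these inputs the first call returns `"regression"` and the second returns `"classification"`.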

Once the training finishes, Cloud AutoML shows a summary and a detailed analysis of the evaluation metrics for the trained model. Since our Target Column is Clicks, a numeric value, it used a Regression Model. It also determined the importance of each feature based on the Training Data.

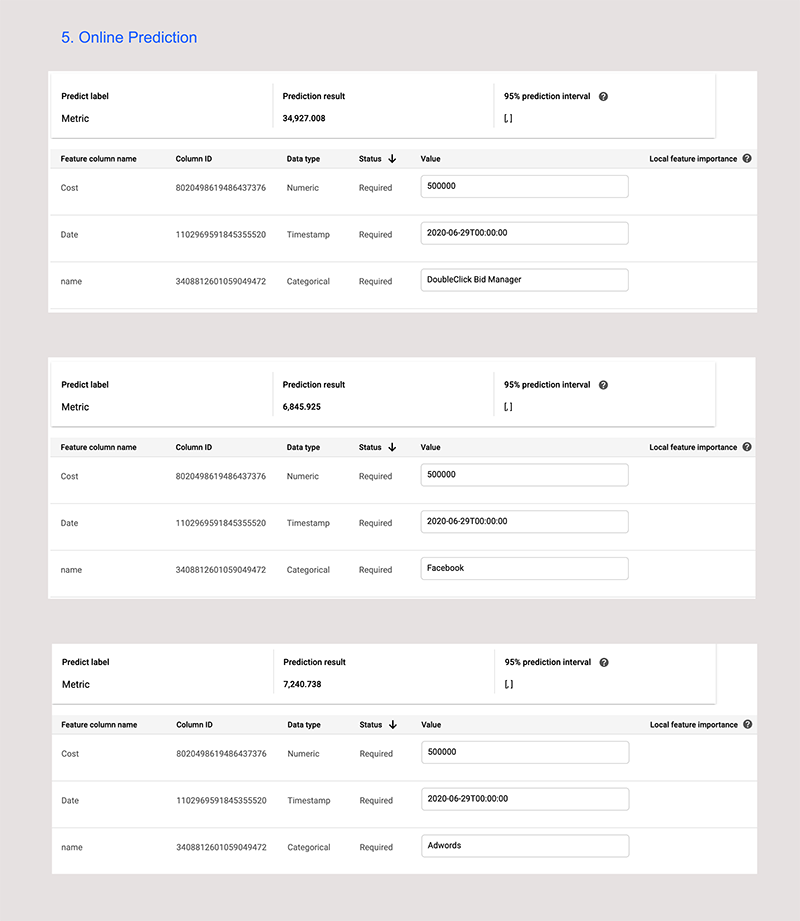

Since it’s an integrated cloud offering, you can also deploy the model and see live online predictions. Above, I experimented with predicting the number of ‘Clicks’ I would receive for a budget of 500,000 on 29th June, 2020 for the respective channels - Adwords, Facebook and DBM.

Closing Thoughts

Cloud AutoML is extremely powerful and, more importantly, has smart defaults for building a robust ML model. Its integration with Google Cloud ensures that you are able to quickly deploy the same model and consume it as an API. You can also export the model and run it on your own infrastructure by hosting it in Docker.

Data science is equal parts art and science. AutoML is making ML accessible and tackling the scientific bits of building an ML model. Data scientists will still be needed to fill in the artistic side of things until AI takes over in the near or distant future.
